Today’s AI tools can summarize feedback, but is summarization enough to truly understand customers in a way that’s impactful for the business?

In my experience building data-science models and AI systems over the past few years, and more recently experimenting with publicly available LLMs, most of these tools fall apart when we ask deeper, product-critical questions.

Here’s why most LLM-based approaches fall short:

LLMs are trained on language - not structured data.

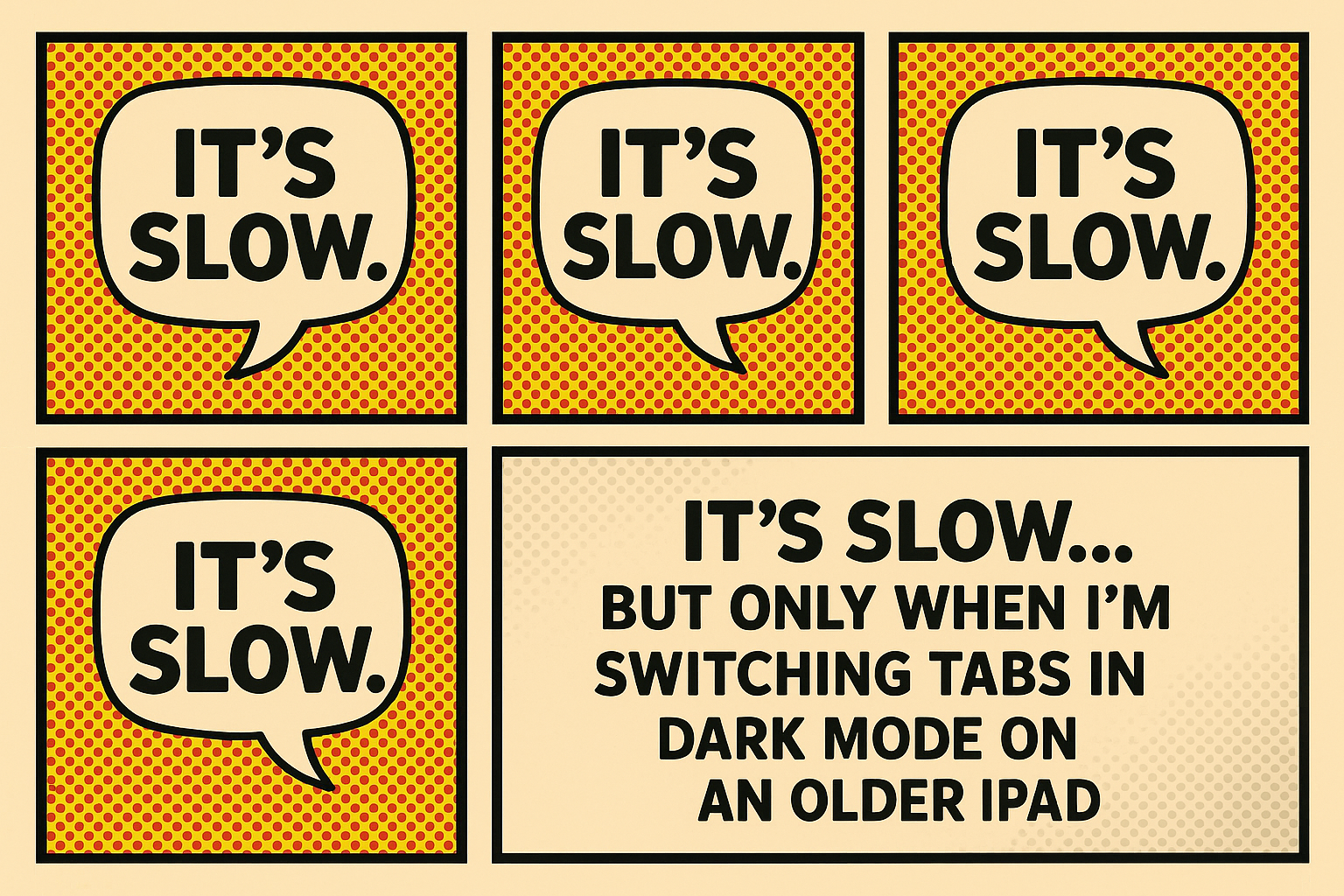

They can describe patterns, but they don’t natively reason over metrics like funnel conversion, adoption curves, or engagement deltas. Ask them to correlate qualitative themes with behavioral trends (say, feature complaints with actual usage drop-off) and they fall short. This brings us back to the classic Achilles’ heel of customer research: you risk being swayed by the loudest or most recent voices.

A thorough benchmark from 2023 examining LLMs' performance on tabular and structured data reasoning demonstrates that most leading models, including GPT-4, show significant deficiencies when reasoning over realistic, complex tables. Performance drops further in the presence of missing values, duplicate entries, or structural variations common in real-world data. These results indicate a persistent gap in the ability of LLMs to robustly handle structured input without external tools or grounding in structured data sources (Kalo et al., 2024).
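To make the "feature complaints vs. usage drop-off" example concrete, here is a minimal sketch of the kind of structured-data step that has to happen outside the LLM. The weekly numbers are hypothetical and purely illustrative, and a plain Pearson correlation is just one simple way to relate the two series, not a claim about how any particular product does it.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / sqrt(var_x * var_y)

# Hypothetical weekly data for a single feature (illustrative numbers only).
weekly_complaints = [2, 3, 5, 8, 13, 21]                # feedback items tagged with the theme
weekly_active_users = [980, 975, 940, 900, 820, 700]    # users who touched the feature

r = pearson(weekly_complaints, weekly_active_users)
print(f"complaints vs. usage: r = {r:.2f}")
```

A strongly negative `r` would suggest that complaints and usage are moving in opposite directions. It does not prove the complaints caused the drop-off, but it is a signal a text-only summary cannot give you.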

I explored this topic in more depth in a previous blog post.

Large Language Models (LLMs) are fundamentally trained to predict the next most likely token based on observed patterns in data. This architectural focus makes them highly effective at capturing and echoing what is most frequently said within a dataset—but not necessarily what is most relevant or important.

Recent research underscores this limitation. Jia et al. (2024) observe that “Large Language Models (LLMs) are excellent pattern matchers… they recognize patterns from the input text, drawing from their vast training, and produce outputs.” However, they caution that while such patterns are computationally efficient and easily discoverable through gradient descent and attention mechanisms, they are “inherently unreliable on their own.” This highlights a persistent gap between surface-level pattern recognition and the kind of deep, analytic reasoning required to interpret complex, real-world data.
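Here is a small sketch of the difference between "most frequently said" and "most important." Everything in it is an assumption made for illustration: the account IDs, the themes, the revenue figures, and the choice of revenue as the proxy for importance.

```python
from collections import defaultdict

# Hypothetical feedback records: (account_id, theme). Illustrative only.
feedback = [
    ("acct_a", "dark mode"), ("acct_a", "dark mode"), ("acct_a", "dark mode"),
    ("acct_b", "dark mode"),
    ("acct_c", "sso login failure"),
    ("acct_d", "sso login failure"),
]

# Hypothetical annual revenue per account, in dollars.
account_revenue = {"acct_a": 1_000, "acct_b": 2_000, "acct_c": 50_000, "acct_d": 80_000}

def rank_by_mentions(records):
    """Rank themes by raw mention count (what a frequency-driven summary echoes)."""
    counts = defaultdict(int)
    for _, theme in records:
        counts[theme] += 1
    return sorted(counts, key=counts.get, reverse=True)

def rank_by_revenue(records, revenue):
    """Rank themes by total revenue of the distinct accounts raising them."""
    accounts = defaultdict(set)
    for account, theme in records:
        accounts[theme].add(account)  # each account counts once per theme
    totals = {t: sum(revenue[a] for a in accts) for t, accts in accounts.items()}
    return sorted(totals, key=totals.get, reverse=True)

print(rank_by_mentions(feedback))
print(rank_by_revenue(feedback, account_revenue))
```

In this toy data, one vocal account pushes "dark mode" to the top of the frequency ranking, while the revenue-weighted view puts the SSO failure first. Which weighting is right depends on your business; the point is that the weighting has to come from structured data, not from the text alone.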

LLMs are stateless by default. They don’t persist history across sessions. They don’t track individuals over time. This means they can’t tell you how opinions have changed over the past two months, how newly onboarded users behave differently from long-term power users, or whether frustration around a feature is rising or fading.

Unless you’ve explicitly built a pipeline that embeds time-awareness, cohort tracking, and user-level metadata, your LLM is working blind.

As Qiu et al. (2024) highlight, large language models (LLMs) consistently lag behind both human judgment and smaller, specialized models—particularly when it comes to maintaining self-consistency. In their study, LLMs produced incoherent or contradictory outputs in over 27% of cases. The authors conclude that “current LLMs lack a consistent temporal model of textual narratives,” underscoring a key structural limitation in how these models process evolving information.
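As a rough sketch of what "time-awareness, cohort tracking, and user-level metadata" can mean in practice, the snippet below buckets feedback by month and by user tenure before anything is summarized. The records, the sentiment scores, and the 90-day cutoff for a "new" user are all hypothetical choices for illustration.

```python
from collections import defaultdict
from datetime import date

# Hypothetical records: (user_id, signup_date, feedback_date, sentiment in [-1, 1]).
records = [
    ("u1", date(2023, 1, 10), date(2024, 3, 5), 0.4),
    ("u1", date(2023, 1, 10), date(2024, 4, 9), 0.3),
    ("u2", date(2024, 2, 20), date(2024, 3, 12), -0.2),
    ("u2", date(2024, 2, 20), date(2024, 4, 15), -0.6),
    ("u3", date(2024, 3, 1), date(2024, 4, 2), -0.4),
]

NEW_USER_DAYS = 90  # assumed cutoff between "new" and "long_term" users

def cohort(signup, when):
    """Label a user by tenure at the moment the feedback was given."""
    return "new" if (when - signup).days <= NEW_USER_DAYS else "long_term"

def sentiment_by_month_and_cohort(rows):
    """Average sentiment per (YYYY-MM, cohort) bucket."""
    buckets = defaultdict(list)
    for _, signup, when, score in rows:
        key = (when.strftime("%Y-%m"), cohort(signup, when))
        buckets[key].append(score)
    return {key: sum(v) / len(v) for key, v in sorted(buckets.items())}

trend = sentiment_by_month_and_cohort(records)
for (month, group), avg in trend.items():
    print(month, group, f"{avg:+.2f}")
```

With this kind of table in hand, questions like "is frustration among new users rising?" become a comparison across rows instead of a guess from whatever feedback happened to be in the prompt.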

I’m not arguing that research should slow down. In fact, for it to have any real impact, it needs to move faster than ever, keeping pace with, or even outpacing, product development. But speed without clarity is noise. AI should help us analyze more intelligently, connecting signals across time, behavior, and context, not just summarize what was said most often.

We need systems built for real-world decisions. That means going beyond plug-and-play LLMs to architectures that surface insights grounded in business logic, structured data, and real usage patterns (P.S. that’s what we’re building at Riley).

Don’t settle for faster noise. Aim for faster truth.

Claudia is the CEO & Co-Founder of Riley AI. Prior to founding Riley AI, Claudia led product, research, and data science teams across the Enterprise and Financial Technology space. Her product strategies led to a $5B total valuation and a successful international acquisition, and scaled organizations to multimillion-dollar revenue. Claudia is passionate about making data-driven strategies collaborative and accessible to every single organization. Claudia completed her MBA and bachelor's degrees at the University of California, Berkeley.